Virtual Assistant

With a virtual assistant (VA), a user can talk to several bots in one conversation, and you can create pipeline steps that add conversational features such as redaction and translation.

A Virtual Assistant has the following components:

- NLU Engine: NLP used for bot classification. NLU Config

- Dispatcher: Manages orchestration of a multi bot configuration.

- Bot Array: A set of bots that a dispatcher can route user input to.

- Pipelines: A series of steps that can be performed as messages enter (IngressPipeline) or leave (EgressPipeline) the ServisBOT system.

- Events: Actions tied to non-input related triggers such as session starts, or missed inputs

- Endpoints: A default endpoint is created automatically when creating a virtual assistant. For additional endpoints, make sure the target reference type is set to va.

Example VA Configurations

{

"Name" : "MoneyTransferVa",

"Description": "A virtual assistant to aid monetary transfers",

"DefaultBotId": "info-bot",

"Bots" : [

{

"Type": "BOTARMY",

"Id": "info-bot",

"Enabled": true,

"Properties": {

"Name": "info-bot"

}

},

{

"Type": "BOTARMY",

"Id": "transfer-bot",

"Enabled": true,

"Properties": {

"Name": "transfer-bot"

}

}

],

"NluEngines": [

{

"Id": "TestVADispatcher",

"Type": "BOTARMY",

"Properties": {

"Secret": "srn:vault::acme:aws-cross-account-role:awscontent-lex-x-role",

"Nlp": "Lex"

}

}

],

"IngressPipeline": [

{

"Id": "CreditCardRedact",

"Type": "REGEX_REDACT",

"Properties": {

"Regex": "(?:5[1-5][0-9]{2}|222[1-9]|22[3-9][0-9]|2[3-6][0-9]{2}|27[01][0-9]|2720)[0-9]{12}"

}

},

{

"Id": "HumanHandoverStep",

"Type": "HUMAN_HANDOVER",

"Properties": {

"Regexes": [".*human.*"],

"BotName": "connectshowcase",

"Messages": {},

"OnJoin": [{"Type": "message","Message": "Hello" }],

"OnLeave": [ {"Type": "message","Message": "good bye"}]

}

}

],

"EgressPipeline": [],

"Events": {

"@SessionStart": [

{

"Id": "sessionStartMessage",

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "Welcome to the transfer VA. Type 'help' for more info about what I do, or 'transfer' to make a monetary transfer."

}

},

{

"Id": "sessionStartMarkup",

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage>\n <List title=\"Please select a number\" selectable=\"true\" interactionType=\"event\">\n <Item title=\"Option one is here\" id=\"1\"/>\n <Item title=\"Option two is here\" id=\"2\"/>\n <Item title=\"Option three is here\" id=\"3\"/>\n <Item title=\"Option four is here\" id=\"4\"/>\n </List>\n</TimelineMessage>",

"Context": {}

}

}

],

"@MissedInput": [

{

"Id": "missedInputMessage",

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "Sorry, I didn't quite get that, could you phrase that differently?"

}

}

]

},

"Persona": "ReportBOT",

"Tags": []

}

VA Properties

- Name: The name of the VA (Required)

- Description: A description of the VA (Optional)

- DefaultBotId: The ID of a bot to assign to the VA by default (Optional)

- Bots: An array of bots that the VA manages (Required, minimum of 1 bot)

- Type: The Bot type. Supported types are MOCK, BOTARMY (Required)

- Id: A custom ID which is used to refer to this bot elsewhere in the VA config (Required)

- Enabled: Controls whether a bot can be routed to by the VA or not

- Properties: Other properties (Required)

- Name: The name of the bot being referred to.

- NluEngines: The NLU engine used to parse user input, allowing for natural language recognition. (Required)

- Id: A custom ID used to refer to this NLU engine elsewhere in the VA config. (Required)

- Type: The NLU engine type. The supported type is Lex. (Required)

- Handlers: An array of different actions triggered by regex (Required)

- IngressPipeline: A series of steps that occur when a message is sent to the VA. The steps here can include redaction for masking sensitive information, language translation, and more. (Optional)

- EgressPipeline: The same as the IngressPipeline, just with messages that are sent out from the VA. (Optional)

- Events: An array of events that trigger actions. (Optional) Supported events are:

- @SessionStart: Triggers at the beginning of a conversation.

- @MissedInput: Triggers when an incoming event cannot be understood and/or acted upon by a Bot or NLU engine.

- @Exception: Triggers when a transient error occurs.

- Persona: Sets the appearance of the bot in Portal (Optional)

- Tags: Arbitrary tags for categorizing your VA. (Optional)
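The required properties listed above can be checked before a configuration is submitted. The following is a minimal sketch in Python, not part of the ServisBOT tooling: the function name `validate_va_config` is hypothetical, and the uniqueness check on bot Ids is an assumption based on the fact that other parts of the configuration refer to a bot by its Id.

```python
from typing import Any

SUPPORTED_BOT_TYPES = {"MOCK", "BOTARMY"}


def validate_va_config(config: dict[str, Any]) -> list[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    problems: list[str] = []

    if not config.get("Name"):
        problems.append("Name is required")

    bots = config.get("Bots") or []
    if len(bots) < 1:
        problems.append("Bots must contain at least one bot")
    for index, bot in enumerate(bots):
        if bot.get("Type") not in SUPPORTED_BOT_TYPES:
            problems.append(f"Bots[{index}].Type must be MOCK or BOTARMY")
        if not bot.get("Id"):
            problems.append(f"Bots[{index}].Id is required")
        if "Properties" not in bot:
            problems.append(f"Bots[{index}].Properties is required")

    # Assumption: bot Ids should be unique, because DefaultBotId and other
    # parts of the config refer to a bot by its Id.
    ids = [bot.get("Id") for bot in bots if bot.get("Id")]
    for bot_id in sorted({i for i in ids if ids.count(i) > 1}):
        problems.append(f"Bot Id '{bot_id}' is used more than once")

    default_bot = config.get("DefaultBotId")
    if default_bot is not None and default_bot not in ids:
        problems.append("DefaultBotId does not match any bot Id")

    engines = config.get("NluEngines") or []
    if not engines:
        problems.append("NluEngines is required")
    for index, engine in enumerate(engines):
        for key in ("Id", "Type"):
            if not engine.get(key):
                problems.append(f"NluEngines[{index}].{key} is required")

    return problems


example = {
    "Name": "MoneyTransferVa",
    "DefaultBotId": "info-bot",
    "Bots": [
        {"Type": "BOTARMY", "Id": "info-bot", "Enabled": True,
         "Properties": {"Name": "info-bot"}},
        {"Type": "BOTARMY", "Id": "transfer-bot", "Enabled": True,
         "Properties": {"Name": "transfer-bot"}},
    ],
    "NluEngines": [
        {"Id": "TestVADispatcher", "Type": "BOTARMY",
         "Properties": {"Nlp": "ServisBOT"}},
    ],
}
print(validate_va_config(example))
```

Returning a list of messages rather than raising on the first failure lets all problems in a configuration be reported in one pass.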

Supported NluEngines

- Lex

- LexV2

- ServisBOT

Lex NluEngine Example

{

"Id": "TestVADispatcher",

"Type": "BOTARMY",

"Properties": {

"Secret": "srn:vault::acme:aws-cross-account-role:awscontent-lex-x-role",

"Region": "eu-west-1",

"Nlp": "Lex"

}

}

- Secret - (Required) The ServisBOT Secret SRN which references the Lex cross-account role secret.

- Region - (Required) The AWS Region where the Lex Bot will be used.

LexV2 NluEngine Example

{

"Id": "TestVADispatcher",

"Type": "BOTARMY",

"Properties": {

"Secret": "srn:vault::acme:aws-cross-account-role:awscontent-lex-x-role",

"Region": "eu-west-1",

"Locale": "en_US",

"Nlp": "LexV2"

}

}

- Secret - (Required) The ServisBOT Secret SRN which references the LexV2 cross-account role secret.

- See here for creating LexV2 cross-account roles

- Region - (Required) The AWS Region where the LexV2 Bot will be used.

- Locale - (Required) The locale to use when creating, updating and communicating with a Lex V2 Bot, e.g. “en_US”. For a full list of supported Lex V2 locales see here.

ServisBOT NluEngine Example

{

"Id": "TestVADispatcher",

"Type": "BOTARMY",

"Properties": {

"Nlp": "ServisBOT"

}

}

Pipeline steps

Below is a list of supported pipeline steps. They can be used in any of the following:

- IngressPipeline

- EgressPipeline

- Events

- @SessionStart

- @MissedInput

- @Exception

Supported Types

- ExecuteApi

- ExecuteFlow

- Generic Http

- GoogleLanguageDetect

- GoogleTranslate

- Human handover

- Named Entity Recognition

- QnaMaker

- Redaction

- Regex Raise Event

- Secure Session

- SendContent

- SendHostNotification

- SendMarkup

- SendMessage

- Compound

- Knowledge Base

- Assign Bot

ExecuteApi

You can configure an API connector using the following

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "EXECUTE_API_CONNECTER",

"Properties": {

"ApiConnecter": "ApiConnecterName",

"OnError": "@EventNameHere"

}

}

]

}

ExecuteFlow

You can configure a pipeline to invoke a flow using the following

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "EXECUTE_FLOW",

"Properties": {

"FlowId": "Flow-UUID"

}

}

]

}

Generic Http

You can configure a generic http request using the following

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "GENERIC_HTTP",

"Properties": {

"Url": "https://urltohit.com",

"OnError": "@EventNameHere"

}

}

]

}

GoogleLanguageDetect

You can configure a pipeline to do Google language detection

{

"IngressPipeline": [

{

"Id": "GoogleLanguageDetect",

"Type": "GOOGLE_LANGUAGE_DETECT",

"Properties": {

"ApiKey": "ApiKey"

}

}

]

}

GoogleTranslate

You can configure a pipeline to run Google Translate on the incoming/outgoing message

{

"IngressPipeline": [

{

"Id": "GoogleTranslate",

"Type": "GOOGLE_TRANSLATE",

"Properties": {

"ApiKey": "ApiKey",

"TargetLanguageSource": "CONFIGURATION",

"TargetLanguage": "en"

}

}

]

}

Human handover

The human handover pipeline step can be used to hand the conversation over to a human agent. Currently, only Amazon Connect, Genesys and Edgetier are supported as human handover types.

{

"IngressPipeline": [

{

"Id": "HumanHandoverStep",

"Type": "HUMAN_HANDOVER",

"Properties": {

"Regexes": [".*human.*"],

"BotName": "connectshowcase",

"Messages": {},

"OnJoin": [{ "Type": "message", "Message": "Hello" }],

"OnLeave": [{ "Type": "message", "Message": "good bye" }]

}

}

]

}

The human handover can have a set of OnJoin and OnLeave events. These follow the same schema as the actions inside of intents.

Supported event types

Message

{

"Type": "message",

"Message": "Hello"

}

Markup

{

"Type": "markup",

"Markup": "<TimelineMessage><List type=\"disc\" selectable=\"true\" interactionType=\"utterance\"><Item title=\"Item one\" id=\"1\"/><Item title=\"Item two\" id=\"2\" /></List> </TimelineMessage>"

}

Content

{

"Type": "content",

"Value": "menu"

}

Host notification

{

"Type": "hostnotification",

"Notification": "SB:::UserInputDisabled"

}

Named Entity Recognition

To use named entity recognition you can use the following ingress pipeline:

{

"IngressPipeline": [

{

"Id": "Named entity recognition",

"Type": "NAMED_ENTITY_RECOGNITION",

"Properties": {}

}

]

}

If entities are detected on ingress of any message, they will be located in context, under the property state.va.entities.

In flow, this property is referenced as msg.payload.context.state.va.entities. In intent fulfilment, it can be referenced using state.va.entities.

Here is an example entity payload:

{

"va":{

"entities":[

{

"utteranceText":"Dublin",

"entity":"Countries, cities, states",

"start":22,

"end":28,

"len":6

}

]

}

}
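As an illustration of how the entity payload might be consumed, here is a minimal Python sketch that slices each detected entity back out of the original utterance. The function name `entity_spans` and the sample utterance are hypothetical, and the sketch assumes that `start` is a zero-based inclusive offset and `end` is exclusive, which is consistent with `len` being 6 in the payload above but is not stated in the source.

```python
from typing import Any


def entity_spans(utterance: str, state: dict[str, Any]) -> list[tuple[str, str]]:
    """Return (entity type, text sliced from the utterance) for each entity."""
    entities = state.get("va", {}).get("entities", [])
    spans = []
    for entity in entities:
        # Assumption: start is inclusive and end is exclusive, both zero-based.
        text = utterance[entity["start"]:entity["end"]]
        spans.append((entity["entity"], text))
    return spans


# Hypothetical utterance, chosen so that "Dublin" sits at offsets 22 to 28.
utterance = "I want to travel from Dublin"
state = {
    "va": {
        "entities": [
            {
                "utteranceText": "Dublin",
                "entity": "Countries, cities, states",
                "start": 22,
                "end": 28,
                "len": 6,
            }
        ]
    }
}
print(entity_spans(utterance, state))
```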

QnaMaker

To use QnA Maker you can use the following

{

"IngressPipeline": [

{

"Id": "QnaMakerStep",

"Type": "QNA_MAKER",

"Properties": {

"Url": "...",

"EndpointKeySecret": "srn:vault::myorg:secret:my-endpoint-key",

"FallbackMessage": "This message will be used if qna maker does not have an answer",

"ConfidenceThreshold": 80

}

}

]

}

Redaction

Redaction can be configured as part of an ingress pipeline. As shown above, the following configuration will prevent credit card numbers from being persisted in the conversation history.

{

"IngressPipeline": [

{

"Id": "CreditCardRedact",

"Type": "REGEX_REDACT",

"Properties": {

"Regex": "(?:5[1-5][0-9]{2}|222[1-9]|22[3-9][0-9]|2[3-6][0-9]{2}|27[01][0-9]|2720)[0-9]{12}"

}

}

]

}

The ingress pipeline can contain one or many REGEX_REDACT entries.
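To see what the regular expression in the example actually matches, it can be tried locally. The sketch below uses Python's standard `re` module; the `redact` helper and the `[REDACTED]` mask are assumptions for illustration only, since the source does not describe how the platform masks the matched text.

```python
import re

# The expression from the REGEX_REDACT example above.
CARD_REGEX = re.compile(
    r"(?:5[1-5][0-9]{2}|222[1-9]|22[3-9][0-9]|2[3-6][0-9]{2}|27[01][0-9]|2720)[0-9]{12}"
)


def redact(message: str, mask: str = "[REDACTED]") -> str:
    """Replace every match of the card expression with a mask (hypothetical)."""
    return CARD_REGEX.sub(mask, message)


print(redact("pay with 5555555555554444 please"))
print(redact("my order number is 12345"))
```

Testing an expression this way before adding it to a pipeline helps confirm that it catches the intended numbers and leaves ordinary text untouched.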

Regex Raise Event

To add a regex raise event you can use the following

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "REGEX_RAISE_EVENT",

"Properties": {

"Regex": ".*Custom*",

"Event": "@CustomEvent"

}

}

]

}

Secure Session

Secure Session can be configured as part of an ingress pipeline. The following configuration runs secure session with a check every 60 seconds.

{

"IngressPipeline": [

{

"Id": "SecureSession",

"Type": "SECURE_SESSION",

"Properties": {

"SecureSessionType": "API_CONNECTER",

"ApiConnecter": "SecureSession",

"ValidationInterval": 60

}

}

]

}

- “SecureSessionType” currently only supports “API_CONNECTER”

- “ApiConnecter” is the alias of any ApiConnecter.

- “ValidationInterval” is how often to validate against the API connector on message ingress

When SecureSession is in use, it fires a “@SecureSessionUnauthorized” event when the ApiConnecter returns a non-2xx response.

{

"Events": {

"@SecureSessionUnauthorized": [

{

"Id": "referenceId",

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "SecureSession check failed"

}

}

]

}

}
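The interaction between ValidationInterval and the @SecureSessionUnauthorized event can be modelled roughly as follows. This is a sketch of one plausible reading of the behaviour described above, not the platform's implementation: the class `SecureSessionCheck`, its method names, and the choice to re-validate once the interval has elapsed are all assumptions.

```python
from typing import Callable, Optional


class SecureSessionCheck:
    """Hypothetical model of a SECURE_SESSION step."""

    def __init__(self, call_connector: Callable[[], int], validation_interval: int) -> None:
        self.call_connector = call_connector  # returns an HTTP status code
        self.validation_interval = validation_interval  # seconds
        self.last_validated: Optional[float] = None

    def on_ingress(self, now: float) -> Optional[str]:
        """Return the event to raise, or None if the message may proceed."""
        due = (
            self.last_validated is None
            or now - self.last_validated >= self.validation_interval
        )
        if not due:
            return None  # validated recently, connector is not called
        status = self.call_connector()
        if not 200 <= status < 300:
            return "@SecureSessionUnauthorized"
        self.last_validated = now
        return None


# Simulated connector: the first call succeeds, the second is rejected.
statuses = iter([200, 401])
check = SecureSessionCheck(lambda: next(statuses), validation_interval=60)
print(check.on_ingress(now=0))
print(check.on_ingress(now=30))
print(check.on_ingress(now=61))
```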

SendContent

To send CMS content

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "SEND_CONTENT",

"Properties": {

"Key": "Content_key"

}

}

]

}

SendHostNotification

You can configure the pipeline to send a host notification message using the following config

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "SEND_HOST_NOTIFICATION",

"Properties": {

"Notification": "Notification"

}

}

]

}

SendMarkup

You can configure the pipeline to send a markup message using the following config

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Hello ${name}</TextMsg></TimelineMessage>",

"Context": {

"name": "world"

}

}

}

]

}
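The example suggests that `${name}` in the Markup is filled in from the Context object. Assuming that is how the placeholder works (the source does not spell out the substitution rules), the effect can be previewed locally with Python's `string.Template`, which uses the same `${...}` syntax. The helper name `render_markup` is hypothetical.

```python
from string import Template


def render_markup(markup: str, context: dict[str, str]) -> str:
    """Fill ${placeholders} from context; unknown placeholders are left as-is."""
    return Template(markup).safe_substitute(context)


markup = "<TimelineMessage><TextMsg>Hello ${name}</TextMsg></TimelineMessage>"
print(render_markup(markup, {"name": "world"}))
```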

SendMessage

You can configure the pipeline to send a message using the following config

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "hello from the pipeline"

}

}

]

}

Compound

You can configure the pipeline to identify compound utterances using the following config

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "COMPOUND",

"Properties": {

"CompoundMessage": "I see you need help with several things",

"UserSelectionConfig": {

"UserSelectionPolicy": "MANUAL|AUTOMATIC",

"MarkupType": "List|SuggestionPrompt|ButtonPromptContainer",

"OnSelectedNoneActions": [

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "You have selected none"

}

},

{

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Try rephrasing your message</TextMsg></TimelineMessage>",

"Context": {}

}

}

]

}

}

}

]

}

See here for more details on the possible configuration values.

Knowledge Base

For KNOWLEDGE_BASE, only Kendra is currently supported. Below is an example Kendra configuration. This step is recommended for use only within the fallback pipeline.

{

"Egress": [

{

"Id": "KB-for-fallback",

"Type": "KNOWLEDGE_BASE",

"Properties": {

"SearchService": {

"Name": "Standard-Index",

"Type": "Kendra",

"Secret": "srn:vault::ORG:aws-cross-account-role:knowledgebase-query",

"MaxQueryTime": 1500, // optional, timeout before ending the search query

"ErrorMessageGeneral": "An error occurred using Kendra.", // message egressed to the user when we hit an error

"ErrorMessageMaxQueryTime": "The query ran longer than expected. Please try another search." // used when we hit the max query timeout

},

"Config": {}

}

}

]

}

Kendra Example

For optional Config values please refer to the docs here

{

"Id": "KB-for-fallback",

"Type": "KNOWLEDGE_BASE",

"Properties": {

"SearchService": {

"Name": "Standard-Index",

"Type": "Kendra",

"Secret": "srn:vault::ORG:aws-cross-account-role:knowledgebase-query",

"MaxQueryTime": 1500, // optional, timeout before ending the search query

"ErrorMessageGeneral": "An error occurred using Kendra.", // message egressed to the user when we hit an error

"ErrorMessageMaxQueryTime": "The query ran longer than expected. Please try another search." // used when we hit the max query timeout

},

"Config": {

"IndexId": "8e2882ce-afb9-4eab-918d-12087266f2d7",

"PageSize": 5, // optional

"UserContextMapping": {}, // optional

"AttributeFilter": [], // optional

"QueryResultTypeFilter": "ANSWER", // optional,

"MinScoreAttribute": "LOW", // optional

"Region": "eu-west-1" // AWS region

}

}

}

See here for more details on the possible configuration values.

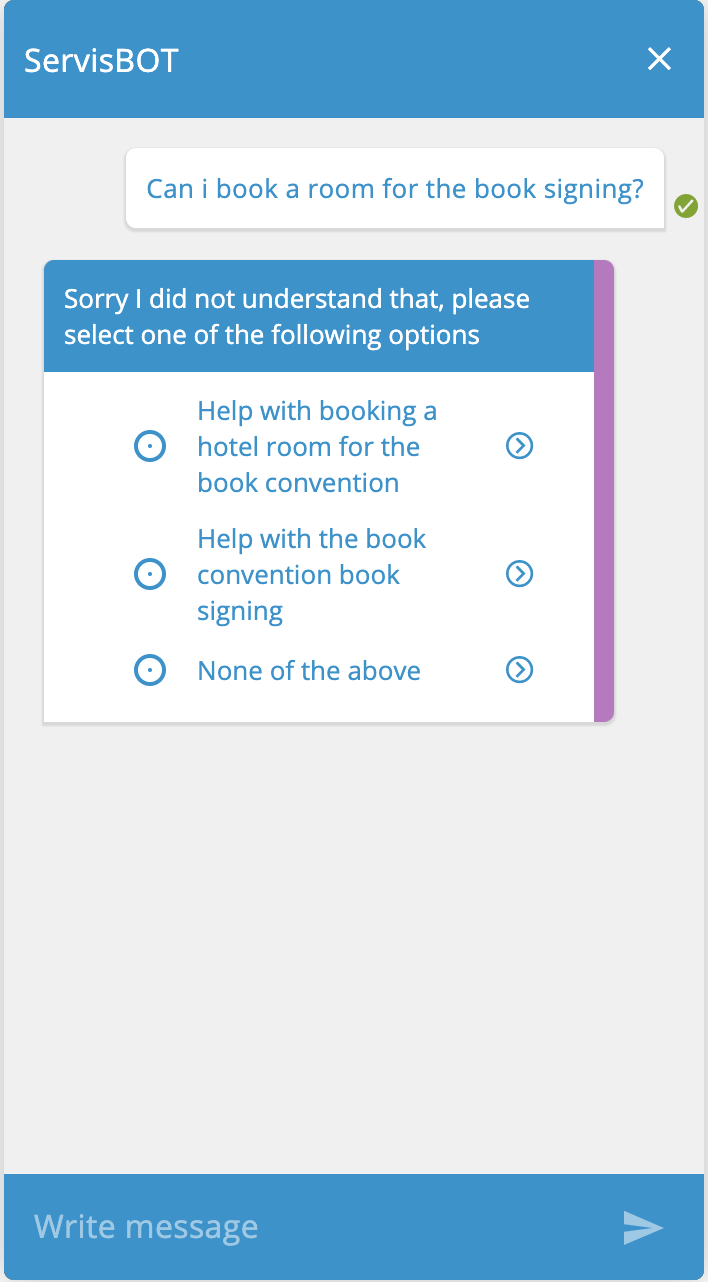

Disambiguation

Disambiguation is supported on the VA via configuration on the VA’s NluEngine.

Sample Disambiguation Configuration

{

"NluEngines": [

{

"Id": "assistant",

"Type": "BOTARMY",

"Properties": {

"Nlp": "ServisBOT",

"DisambiguationConfig": {

"IntentCombinations": [

{

"DisambiguationMessage": "Sorry I did not understand that",

"IntentCombination": [

{

"IntentAlias": "vacation_question",

"BotName": "SmallTalkBot"

},

{

"IntentAlias": "vacation_booking",

"BotName": "VacationBot"

}

],

"OnSelectedNoneActions": [

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "You have selected none."

}

},

{

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Please ask me another question</TextMsg></TimelineMessage>"

}

}

]

}

]

}

}

}

]

}

- IntentCombinations - A list of intent combination objects that you want to use to generate intents for disambiguation on the VA dispatcher.

  - DisambiguationMessage - The message used to prompt the user to select an intent (this is the list title). This is optional, and defaults to ‘Sorry I did not understand that, please select one of the following options’.

  - IntentCombination - The intents to be used to form the intent to be used for disambiguation.

    - BotName - The bot name that is associated with the intent alias. This must be a bot on the VA, and it must match the name of the bot in the VA configuration.

    - IntentAlias - The intent alias to use from the bot. This intent must exist on the bot.

  - OnSelectedNoneActions - A list of actions to execute if the user selects none of the presented intents. These are also executed if the user types a response to the bot rather than interacting with the list of options. This is optional, and by default no actions will be executed. See Supported “OnSelectedNoneActions”.
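Because each BotName in an intent combination must match a bot on the VA, a mismatch is an easy mistake to make. The following Python sketch shows one way to catch it before deploying; the function name `unknown_disambiguation_bots` is hypothetical, and it assumes the bot name to compare against is the `Properties.Name` value of each entry in `Bots`.

```python
from typing import Any


def unknown_disambiguation_bots(va_config: dict[str, Any]) -> list[str]:
    """Return BotName values used in disambiguation that are not bots on the VA."""
    # Assumption: the name to match is Properties.Name of each bot entry.
    bot_names = {
        bot.get("Properties", {}).get("Name") for bot in va_config.get("Bots", [])
    }
    missing: list[str] = []
    for engine in va_config.get("NluEngines", []):
        disambiguation = engine.get("Properties", {}).get("DisambiguationConfig", {})
        for combination in disambiguation.get("IntentCombinations", []):
            for intent in combination.get("IntentCombination", []):
                if intent["BotName"] not in bot_names:
                    missing.append(intent["BotName"])
    return missing


va_config = {
    "Bots": [
        {"Type": "BOTARMY", "Id": "smalltalk", "Enabled": True,
         "Properties": {"Name": "SmallTalkBot"}},
    ],
    "NluEngines": [
        {
            "Id": "assistant",
            "Type": "BOTARMY",
            "Properties": {
                "Nlp": "ServisBOT",
                "DisambiguationConfig": {
                    "IntentCombinations": [
                        {
                            "IntentCombination": [
                                {"IntentAlias": "vacation_question", "BotName": "SmallTalkBot"},
                                {"IntentAlias": "vacation_booking", "BotName": "VacationBot"},
                            ]
                        }
                    ]
                },
            },
        }
    ],
}
print(unknown_disambiguation_bots(va_config))
```

In this sample, VacationBot is reported because it is referenced in the intent combination but is not listed under Bots.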

Disambiguation Supported “OnSelectedNoneActions”

The following actions are supported within the OnSelectedNoneActions configuration.

SEND_MESSAGE

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "You have selected none."

}

}

SEND_MARKUP

{

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Please ask me another question</TextMsg></TimelineMessage>"

}

}

Disambiguation Supported Intent Fulfillment Actions

When a user selects an intent from the list of options, the VA will execute the fulfillment actions for the intent that was selected. Below is a list of the currently supported fulfillment actions when an intent is selected.

message

{

"type": "message",

"value": "hello"

}

markup

{

"type": "markup",

"value": "<TimelineMessage><TextMsg>hello</TextMsg></TimelineMessage>"

}

Note that the intent description is displayed to the user for each intent. At the time of writing, setting an intent description is not supported on bulk API actions. If no description is present, the intent displayName is shown.

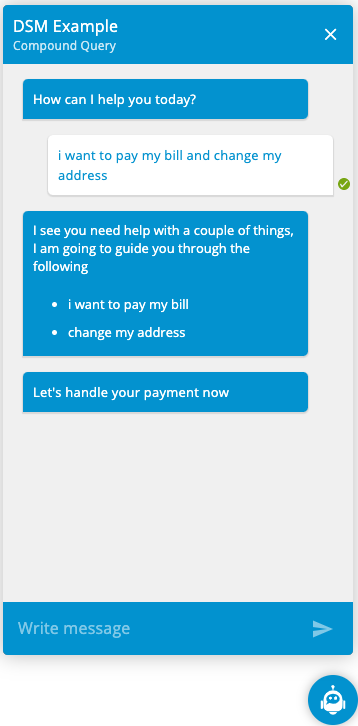

Handling Compound Utterances

What is a Compound Utterance?

A compound utterance is a single utterance provided by the user which contains several requests. For example a user might say “I want to pay my bill and change my address”.

In the example given above, the user has specified two requests “I want to pay my bill” and “change my address”, which is considered to be a compound utterance.

Sample Compound Step Configuration

To identify compound utterances in your VA you can configure a COMPOUND step on your VA ingress pipeline. A sample configuration can be found below.

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "COMPOUND",

"Properties": {

"CompoundMessage": "I see you need help with several things",

"UserSelectionConfig": {

"UserSelectionPolicy": "MANUAL|AUTOMATIC",

"MarkupType": "List|SuggestionPrompt|ButtonPromptContainer",

"OnSelectedNoneActions": [

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "You have selected none"

}

},

{

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Try rephrasing your message</TextMsg></TimelineMessage>",

"Context": {}

}

}

]

}

}

}

]

}

- CompoundMessage - The message used to inform the user that a compound utterance was identified. This is optional, and defaults to “I see you need help with a couple of things, I am going to guide you through the following”.

- UserSelectionConfig - An object which contains the type of user selection that will occur when a compound utterance is identified. This is optional, and will default to AUTOMATIC mode if no configuration is provided. AUTOMATIC mode will be explained in more detail below.

  - UserSelectionPolicy - The type of user selection policy that should be used when a compound utterance is identified. Possible values are AUTOMATIC or MANUAL. This value is optional, and defaults to AUTOMATIC. See here for more details on the differences between AUTOMATIC and MANUAL user selection policies.

  - MarkupType - The type of markup you wish to use to render the identified individual utterances in the ServisBOT messenger. The possible values are List, ButtonPromptContainer and SuggestionPrompt. This will default to List if no value is provided. This option is only used for MANUAL selection mode; AUTOMATIC mode will always use a read-only List to render the utterances.

  - OnSelectedNoneActions - A list of actions to execute if the user selects none of the presented options. These are also executed if the user types a response rather than interacting with the list of options. This is optional, and by default no actions will be executed. This option is only used for MANUAL selection mode. See here for details on the supported actions.

Supported “OnSelectedNoneActions” for Compound Step

SEND_MESSAGE

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "You have selected none."

}

}

SEND_MARKUP

{

"Type": "SEND_MARKUP",

"Properties": {

"Markup": "<TimelineMessage><TextMsg>Please ask me another question</TextMsg></TimelineMessage>"

}

}

Differences between AUTOMATIC & MANUAL UserSelectionPolicies

Automatic Selection Mode

- The VA will assign the best suited bot for each individual utterance that it identifies.

- The VA will cycle through the bots in the order the utterances were identified.

  - For example, if the following compound utterance was given to the VA, “pay bill & change address”, and the VA selected the PayBillBot for the “pay bill” utterance and the ChangeAddressBot for the “change address” utterance, then the VA will communicate with the PayBillBot first, and then the ChangeAddressBot.

- The user will then be presented with a read-only list containing all of the individual utterances that were identified and informs the user that these utterances will be actioned.

- The VA will then begin communication with the first bot by forwarding the individual utterance that is associated with the first bot.

- When an intent is detected, there are currently 2 scenarios:

  - Synchronous fulfillment - All the actions are completed and a BotMissionDone action is executed once finished. This happens automatically, there is no need to add a BotMissionDone action to your intent.

  - Asynchronous fulfillment - This occurs when the fulfillment actions contain an action flow and the BotMissionDone is deferred until the action flow is complete. This happens automatically, there is no need to add a BotMissionDone action to your intent or flow.

- Once the VA receives the BotMissionDone, it will move onto the next bot in the compound work flow, assuming there are still bots left to handle the compound utterance.

- When all compound utterances are cycled and fulfilled, the VA’s assignment stack will be clear and waiting for a new user input.
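The automatic flow described in the list above can be pictured as a simple queue. The Python sketch below is only a simulation for illustration: the function `run_automatic` and its log messages are hypothetical, and the wait for BotMissionDone, which in the real system happens asynchronously, is reduced to a log line.

```python
from collections import deque


def run_automatic(assignments: list[tuple[str, str]]) -> list[str]:
    """Simulate AUTOMATIC mode.

    assignments holds (utterance, bot) pairs in the order the utterances
    were identified. Returns a log of what the VA would do.
    """
    queue = deque(assignments)
    log: list[str] = []
    while queue:
        utterance, bot = queue.popleft()
        log.append(f"forward '{utterance}' to {bot}")
        # In the real system the VA waits here until the bot signals
        # BotMissionDone; this simulation just records it.
        log.append(f"BotMissionDone from {bot}")
    log.append("assignment stack clear")
    return log


for line in run_automatic([
    ("pay bill", "PayBillBot"),
    ("change address", "ChangeAddressBot"),
]):
    print(line)
```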

Here is a sample of what the options look like when AUTOMATIC mode is being used.

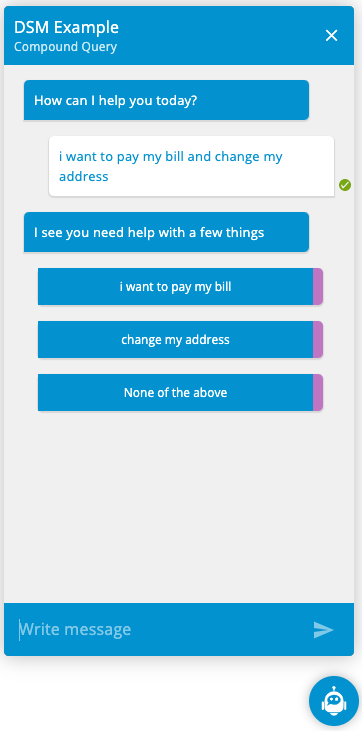

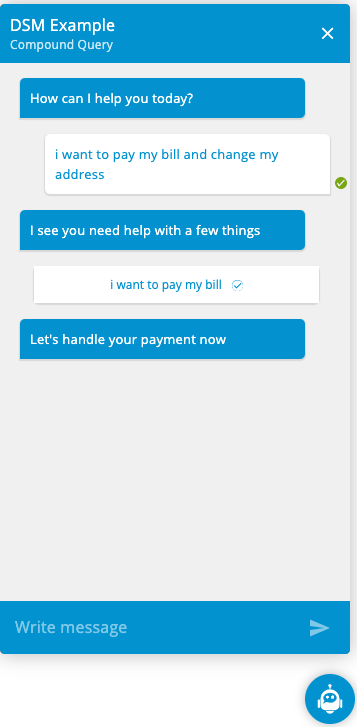

Manual Selection Mode

- The VA will assign the best suited bot for each individual utterance that it identifies.

- The user will be presented with markup containing the identified individual utterances. The markup the user sees is determined by the MarkupType used in the UserSelectionConfig.

- The user can then select the individual utterance they wish to action or select “None of the above”.

- If the user selects one of the individual utterance, the VA will begin communication with the bot that is associated with the selected individual utterance by forwarding the utterance to the bot.

- If the user selects None of the above or types instead of selecting one of the presented options, then the OnSelectedNone actions will be executed if they are present in the UserSelectionConfig.

Here is a sample of what the options look like when MANUAL mode is being used with a ButtonPromptContainer, before and after user selection.

In both modes, the VA will not attempt to identify compound utterances if the VA is already handling a compound utterance.

Sentiment Recognition

This pipeline step will attempt to detect whether the user input is ‘Positive’ or ‘Negative’ based on the AFINN word list and Emoji Sentiment Ranking.

Sample Sentiment Recognition Config

{

"Name": "SentimentRecogVA",

"Bots": [

{

"Id": "botty",

"Type": "BOTARMY",

"Enabled": true,

"Properties": {

"Name": "botty"

}

}

],

"NluEngines": [

{

"Id": "Dispatcher",

"Type": "BOTARMY",

"Properties": {

"Nlp": "ServisBOT"

}

}

],

"IngressPipeline": [

{

"Id": "PositiveSentiment",

"Type": "SENTIMENT_RECOGNITION",

"Properties": {

"SentimentType": "Positive",

"Threshold": "0",

"CustomWords": [

{

"Word": "cats",

"Score": 5

},

{

"Word": "heck",

"Score": -5

}

],

"OnSentimentDetectedActions": [

{

"Type": "SEND_MESSAGE",

"Properties": {

"Message": "I am happy you are enjoying your ServisBOT experience"

}

}

]

}

}

],

"EgressPipeline": [],

"Events": {},

"Persona": "RefundBOT",

"Description": "A va for me",

"Tags": []

}

Properties:

- SentimentType: ‘Positive|Negative’ - This decides how we check whether to perform the actions in the OnSentimentDetectedActions list.

  - When this value is Positive, we perform actions if the comparative score is above the Threshold.

  - When the value is Negative, we perform actions if the comparative score is below the Threshold.

- Threshold: ‘Number between -1 and 1’ - This is the threshold which we use to decide whether to perform actions or not.

- CustomWords: [ { "Word": "The Word to classify", "Score": "Number between -5 and 5 to give the word" } ] - This provides a way to add custom words or overwrite the scores of existing words in the word list.

- OnSentimentDetectedActions: ‘List of botnet actions’ - These are the actions to perform if the threshold is met. Currently only SendMessage and SendMarkup are supported.

Context

The result of the sentiment analysis is stored in context here:

msg.payload.state.input.sentiment

{

"sentiment": {

"score": 7,

"comparative": 0.4666666666666668,

"threshold": "0",

"result": "Positive",

"calculation": [

{

"cats": 5

},

{

"like": 2

}

],

"tokens": [

"i",

"like",

"cats"

],

"words": [

"cats",

"like"

],

"positive": [

"cats",

"like"

],

"negative": []

}

}

Properties

- score - The sum of all the recognized words’ sentiment scores.

- comparative - The final score of the sentiment recognition. It is a value between -1 and 1.

  The comparative score is derived from the sum of all the recognized words’ sentiment scores divided by the total number of words in the utterance. This equation will give a result between -5 and 5. We then normalize this number to return a value between -1 and 1, where -1 is the most negative result and 1 is the most positive.

- result - Can have the value Positive or Negative. It is based on the comparative score and threshold.

  - If the comparative score is greater than the threshold the result will be Positive.

  - If the comparative score is less than the threshold the result will be Negative.

- threshold - The Threshold set in the pipeline step configuration.

- calculation - A list of recognized words with their AFINN/Custom score.

- tokens - All the tokens, such as words or emojis, found in the utterance.

- words - List of words from the utterance that were found in the AFINN/Custom list.

- positive - List of positive words from the utterance that were found in the AFINN/Custom list.

- negative - List of negative words from the utterance that were found in the AFINN/Custom list.
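The comparative score in the sample context can be reproduced by hand. The Python sketch below assumes that normalization means dividing the per-token average by 5, which matches the sample (7 / 3 / 5 ≈ 0.467) but is not stated explicitly in the source. The function names are hypothetical, and treating a score exactly equal to the threshold as Negative is also an assumption, since the source only covers the greater-than and less-than cases.

```python
def comparative_score(word_scores: dict[str, int], tokens: list[str]) -> float:
    """Average the recognized word scores over all tokens, then scale to [-1, 1]."""
    if not tokens:
        return 0.0
    total = sum(word_scores.get(token, 0) for token in tokens)
    # Assumption: the -5..5 average is normalized by dividing by 5.
    return (total / len(tokens)) / 5


def sentiment_result(comparative: float, threshold: float) -> str:
    """Positive above the threshold; anything else is treated as Negative here."""
    return "Positive" if comparative > threshold else "Negative"


# Values taken from the sample context: "cats" is a custom word scored 5,
# "like" has a score of 2, and "i" is not in the word list.
word_scores = {"cats": 5, "like": 2}
tokens = ["i", "like", "cats"]

comparative = comparative_score(word_scores, tokens)
print(round(comparative, 4))
print(sentiment_result(comparative, 0))
print(sentiment_result(comparative, 0.5))
```

With a threshold of 0 the utterance is classified as Positive, while a threshold of 0.5 turns the same utterance into a Negative result, which is why extreme threshold values need care.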

Examples

Conditional Fulfillment based on Sentiment

Take the following pipeline on a VA or Enhanced Bot.

"IngressPipeline": [

{

"Id": "PositiveSentiment",

"Type": "SENTIMENT_RECOGNITION",

"Properties": {

"SentimentType": "Positive",

"Threshold": ".05",

"CustomWords": [],

"OnSentimentDetectedActions": []

}

}

]

Along with this intent on a bot configured in the VA or Enhanced Bot.

{

"alias": "support",

"displayName": "support",

"utterances": [

{

"text": "help",

"enabled": true

},

{

"text": "support",

"enabled": true

}

],

"detection": {

"actions": []

},

"slots": [],

"scope": "private",

"fulfilment": {

"actions": [

{

"type": "message",

"value": "I can help you. ",

"condition": "state.input.sentiment.result === \"Positive\""

},

{

"type": "message",

"value": "Get a better attitude and I'll help",

"condition": "state.input.sentiment.result === \"Negative\""

}

]

},

"errors": []

}

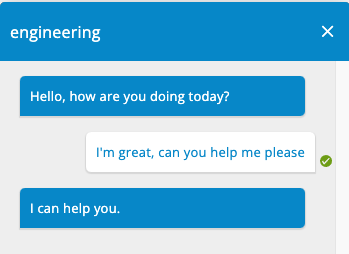

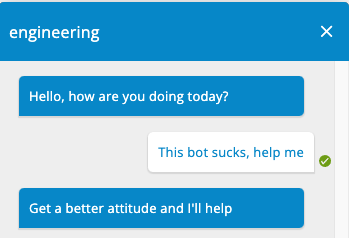

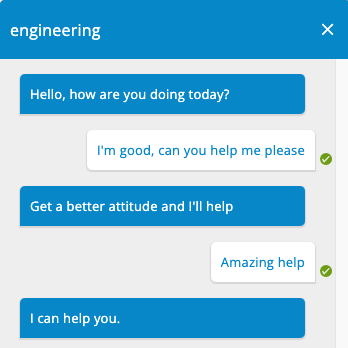

With this configuration, when this intent is hit, how the bot will respond is based on the detected sentiment from the user input.

This response is based on the value of state.input.sentiment.result in the conversations context.

It is important to note that if your threshold value is very negative or very positive it may result in some undesired behavior. For instance, if the threshold on the sentiment pipeline step is set to ‘.5’, naturally positive utterances will result in a Negative result.

"IngressPipeline": [

{

"Id": "PositiveSentiment",

"Type": "SENTIMENT_RECOGNITION",

"Properties": {

"SentimentType": "Positive",

"Threshold": ".5",

"CustomWords": [],

"OnSentimentDetectedActions": []

}

}

]

Assign Bot

You can configure the pipeline to assign a bot using the following config.

Note: the bot needs to be part of the VA.

{

"IngressPipeline": [

{

"Id": "referenceId",

"Type": "ASSIGN_BOT",

"Properties": {

"BotName": "fallback"

}

}

]

}

Handling Missed Input with OpenAI

You can send missed inputs to OpenAI by using an ASSIGN_BOT step within the virtual assistant’s @MissedInput pipeline, and assigning a bot which contains an llm-worker that is configured to communicate with OpenAI.

Once the OpenAI-based bot handles the missed input, it returns control to the virtual assistant. Subsequent user messages go through the normal virtual assistant process, with OpenAI only being used when the virtual assistant cannot handle the user input.
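As a rough illustration, the relevant fragment of the VA configuration could be generated as follows. This Python sketch only combines the ASSIGN_BOT step and the @MissedInput event shown earlier on this page; the helper name `missed_input_handover`, the step Id `assignLlmBot` and the bot name `openai-bot` are hypothetical, and the named bot would still need to be listed in the VA's Bots array.

```python
import json
from typing import Any


def missed_input_handover(bot_name: str) -> dict[str, Any]:
    """Build an Events fragment that assigns bot_name on @MissedInput."""
    return {
        "Events": {
            "@MissedInput": [
                {
                    "Id": "assignLlmBot",  # hypothetical step Id
                    "Type": "ASSIGN_BOT",
                    "Properties": {"BotName": bot_name},
                }
            ]
        }
    }


# "openai-bot" is a placeholder for a bot that contains an llm-worker.
fragment = missed_input_handover("openai-bot")
print(json.dumps(fragment, indent=2))
```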